ISP Routing Hell

Weird Routes?

History:

I wasn't really sure how to start this post, so I guess some back story. Many in the IT world know that consumer ISPs (internet service providers) like ATT, Comcast, and Charter (now Spectrum) all have a weird history of "you can't prove there's a problem because we don't escalate it properly, so now you're stuck with this while we replace your router 200 times because it can't be on us" sort of problems. I switched to Spectrum because in my area it's the only non-ATT fiber line, and ATT couldn't tell me why my router had an SSH server listening on it. They wouldn't consider that it was compromised or could be compromised, and they couldn't tell me anything; they just replaced it. Then replaced it again. Then again. After 2 years of that, I'd had enough. Later that year, after I left ATT, this came to light: https://en.wikipedia.org/wiki/Salt_Typhoon. But sadly, despite my contempt for ATT by this point, this post isn't about them. More often than not, when you have an issue with ISPs like this, they simply refuse to listen. Today's story is about a surprising success in getting an issue resolved.

Another slight back story: routing protocols like OSPF and EIGRP have, for at least 20 years, had known misconfigurations and bad practices that can lead to misrouted traffic, or (in an ISP environment) let customers arbitrarily set routes that get cached and reused. Or, ya know, just lots of "we have the technology to choose the best routes, but we accidentally allowed ___ to happen, which was not the best route" situations. In some cases these issues include routing loops, or routing trees that shouldn't exist. To a computer inside those networks, that all looks like routes that make no sense, routing loops that never end, or traffic redirected through a middle stage it should never pass through.

An Accidental Discovery

I frequently use k8s and k3s clusters, so I tend to have many IPs directly available to me at any time. While doing some bug bounty hunting, a scanner I was using found some vulnerabilities on a subdomain that was a CNAME (basically a DNS redirection) for something else. That CNAME then pointed to a private 172. IP, so I wondered if it was something in my containers, or my system, or a VM, or something like that. So, logically, I decided to traceroute this IP address. What ensued didn't exactly shock me, but it also isn't what I'd expect to find in 2025. Did you read above where I mentioned routing problems? Weeeelll...

1: SBE1V1K.lan 3.142ms

2: vlan-200.ana05wxhctxbn.netops.charter.com 7.075ms

3: lag-56.wxhctxbn01h.netops.charter.com 8.494ms

4: lag-38.rcr01dllstx97.netops.charter.com 12.345ms

5: lag-100.rcr01ftwptxzp.netops.charter.com 13.750ms asymm 4

6: lag-6.bbr01rvsdca.netops.charter.com 50.344ms

7: lag-1-10.crr03rvsdca.netops.charter.com 49.355ms asymm 8

8: lag-305.crr02rvsdca.netops.charter.com 49.280ms

9: lag-305.crr02mtpkca.netops.charter.com 60.820ms

10: lag-10.crr02mtpkca.netops.charter.com 50.756ms asymm 9

11: lag-320.dtr04mtpkca.netops.charter.com 47.263ms asymm 10

12: int-4-3.acr02mtpkca.netops.charter.com 52.721ms asymm 11

13: 172.27.52.188 59.896ms reached

The ISP involved here was Spectrum fiber. As far as I'm aware (from a Google search of Reddit), this is not one of the other routing issues Spectrum has recently been known for, and as far as I know I'm the first to escalate this problem to them. To explain the trace: hop 1 is my router, and the target (172.27.52.188) is 12 hops away. This IP address, reachable from my network, is someone's IP camera system. There were 1000s of IPs I was able to reach this way.
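To make that reading of the trace a bit more concrete, here is a minimal Python sketch (not the tool described later in this post) that parses tracepath-style lines like the ones above and checks whether the last hop is a private address that was reached more than one hop out. The regex, the function names `parse_tracepath` and `private_target_reached`, and the trimmed sample are all assumptions made for illustration.

```python
import ipaddress
import re

# Matches tracepath-style lines such as "13: 172.27.52.188 59.896ms reached".
HOP_RE = re.compile(r"^\s*(\d+):\s+(\S+)\s+([\d.]+)ms(?:\s+(.*))?$")


def parse_tracepath(text):
    """Return a list of (hop_number, host, rtt_ms, note) tuples."""
    hops = []
    for line in text.splitlines():
        m = HOP_RE.match(line)
        if m:
            note = (m.group(4) or "").strip()
            hops.append((int(m.group(1)), m.group(2), float(m.group(3)), note))
    return hops


def private_target_reached(hops):
    """True if the final hop is a reached private address beyond hop 1."""
    if not hops:
        return False
    number, host, _rtt, note = hops[-1]
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        return False  # last hop was printed as a hostname, not an address
    return addr.is_private and note == "reached" and number > 1


# Trimmed copy of the trace above (hops 3-11 left out to keep it short).
sample = """\
1: SBE1V1K.lan 3.142ms
2: vlan-200.ana05wxhctxbn.netops.charter.com 7.075ms
12: int-4-3.acr02mtpkca.netops.charter.com 52.721ms asymm 11
13: 172.27.52.188 59.896ms reached
"""

hops = parse_tracepath(sample)
print(hops[-1], private_target_reached(hops))
```

A private address answering at hop 13, after a string of ISP backbone hostnames, is exactly the pattern that shouldn't exist.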

Some examples (these were shared with spectrum even before they told me it couldn't be escalated):

Unprotected router (edited to remove details):

Vulnerable ILO version that also exposed a hostname:

ADVA equipment

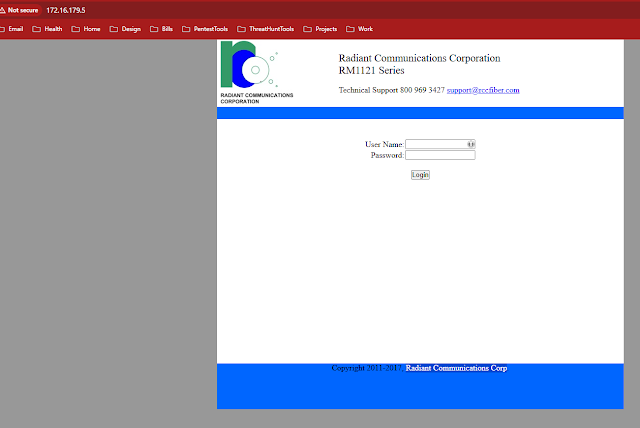

Radiant RM1121 (CATV system)

Bringing to attention #1

As might be expected, trying to get attention on this was like pulling teeth, though at first it wasn't so bad. First off, it's worth mentioning that their site doesn't list any vulnerability disclosure process that I could identify, nor do they have any contact information outside of chat or social media chats. I attempted to escalate this via their chat. I reported the first one I found, since it was found by accident, and they gave a generic "I'm submitting this." They didn't really say who to, but I left it alone, hoping it would be fixed soon. If this were the end of the story, this post would have been more about how I made a tool to detect these issues, and that would be that. Sadly, it wasn't.

Detection tool

So, in theory, if a route is towards 172.16.0.0/12 and it's routing outside of my broadcast domain, through my primary route, then it's an inappropriate route for my environment. I wondered just how many were exposed like this. Let's build a tool for this.

To start with, let's first run nmap to do something similar. Nmap has been a well-respected name in networking tools for many years, and it's ideal to know how to use the trusted tools first. What I like about nmap's output is that it shows a traceroute, but when routes match it shortens them with "Hops x-y are the same as for {whatever}". That's a huge advantage, as you can see common patterns. If you take these traceroutes and overlay them, you could troubleshoot where a route is having problems.
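That rule is small enough to write down directly. The following is only a sketch of the idea, assuming the hop addresses have already been collected as strings; the function name `is_inappropriate_route` and the `192.168.1.0/24` local network are hypothetical (the prefix length of my LAN is an assumption).

```python
import ipaddress


def is_inappropriate_route(dest, hop_ips, local_net):
    """Flag a private destination outside local_net reached via public hops.

    dest      -- destination address as a string
    hop_ips   -- hop addresses seen on the way, as strings
    local_net -- the local broadcast domain in CIDR form (assumed known)
    """
    dest_addr = ipaddress.ip_address(dest)
    net = ipaddress.ip_network(local_net)
    # Public destinations and hosts on my own LAN are not interesting here.
    if not dest_addr.is_private or dest_addr in net:
        return False
    # Private destination, yet the path crossed globally routable addresses.
    return any(ipaddress.ip_address(h).is_global for h in hop_ips)


print(is_inappropriate_route(
    "172.16.0.9",
    ["192.168.1.1", "159.111.244.13", "96.34.112.232"],
    "192.168.1.0/24",
))
```

A trace that stops at the local router never sees a global hop, so it is not flagged.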

nmap -sn --traceroute 172.16.0.0/12 -oN nmap-output

...

Nmap scan report for 172.16.0.9

Host is up (0.061s latency).

TRACEROUTE (using proto 1/icmp)

HOP RTT ADDRESS

1 3.16 ms SBE1V1K.lan (192.168.1.1)

2 9.05 ms vlan-200.ana05wxhctxbn.netops.charter.com (159.111.244.13)

3 5.84 ms lag-56.wxhctxbn01h.netops.charter.com (96.34.112.232)

4 8.74 ms lag-38.rcr01ftwptxzp.netops.charter.com (96.34.130.232)

5 17.91 ms lag-806-10.bbr01dllstx.netops.charter.com (96.34.2.32)

6 34.17 ms lag-809.bbr02atlnga.netops.charter.com (96.34.1.204)

7 33.32 ms lag-7.bbr01atlnga.netops.charter.com (96.34.0.24)

8 59.84 ms lag-806.bbr01blvlil.netops.charter.com (96.34.0.37)

9 58.04 ms lag-808-10.crr01blvlil.netops.charter.com (96.34.2.109)

10 58.20 ms lag-300.crr01olvemo.netops.charter.com (96.34.76.176)

11 58.30 ms 172.16.0.9

Nmap scan report for 172.16.0.10

Host is up (0.0028s latency).

TRACEROUTE (using port 80/tcp)

HOP RTT ADDRESS

1 ...

2 5.18 ms 172.16.0.10

Nmap scan report for 172.16.0.11

Host is up (0.060s latency).

TRACEROUTE (using proto 1/icmp)

HOP RTT ADDRESS

- Hops 1-10 are the same as for 172.16.0.9

11 57.77 ms lag-100.dtr01olvemo.netops.charter.com (96.34.48.95)

12 60.59 ms 172.16.0.11

--------------------
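For overlaying those traces, the "Hops x-y are the same as for" lines need to be expanded back into full paths. Here is a rough Python sketch of one way to do that against nmap's normal (-oN) output; the regexes and the name `parse_nmap_traces` are my own assumptions, the sample is a shortened adaptation of the output above, and it assumes the referenced host's trace has no missing ("...") hops and that report lines carry a bare IP.

```python
import re

REPORT_RE = re.compile(r"^Nmap scan report for (\S+)")
HOP_RE = re.compile(r"^(\d+)\s+[\d.]+ ms\s+(.+)$")
SAME_RE = re.compile(r"^-\s+Hops (\d+)-(\d+) are the same as for (\S+)")


def parse_nmap_traces(text):
    """Map each scanned host to its hop list, expanding 'same as' lines."""
    paths = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        m = REPORT_RE.match(line)
        if m:
            current = m.group(1)
            paths[current] = []
            continue
        if current is None:
            continue
        m = SAME_RE.match(line)
        if m:
            first, last, other = int(m.group(1)), int(m.group(2)), m.group(3)
            # Copy the shared hops from the host nmap refers back to.
            paths[current].extend(paths.get(other, [])[first - 1:last])
            continue
        m = HOP_RE.match(line)
        if m:
            paths[current].append(m.group(2))
    return paths


# Shortened sample adapted from the output above (middle hops removed).
sample = """\
Nmap scan report for 172.16.0.9
HOP RTT ADDRESS
1 3.16 ms SBE1V1K.lan (192.168.1.1)
2 9.05 ms vlan-200.ana05wxhctxbn.netops.charter.com (159.111.244.13)
3 58.30 ms 172.16.0.9
Nmap scan report for 172.16.0.11
HOP RTT ADDRESS
- Hops 1-2 are the same as for 172.16.0.9
3 57.77 ms lag-100.dtr01olvemo.netops.charter.com (96.34.48.95)
4 60.59 ms 172.16.0.11
"""

paths = parse_nmap_traces(sample)
for host, hops in paths.items():
    print(host, len(hops), "hops")
```

With full paths per host, finding where two routes diverge is just a matter of comparing the lists element by element.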

Or grepping:

grep -i "netops.charter.com\|are the same as for" -B 6 -A 2 nmap-output

Nmap scan report for 172.16.0.9

Host is up (0.061s latency).

TRACEROUTE (using proto 1/icmp)

HOP RTT ADDRESS

1 3.16 ms SBE1V1K.lan (192.168.1.1)

2 9.05 ms vlan-200.ana05wxhctxbn.netops.charter.com (159.111.244.13)

3 5.84 ms lag-56.wxhctxbn01h.netops.charter.com (96.34.112.232)

4 8.74 ms lag-38.rcr01ftwptxzp.netops.charter.com (96.34.130.232)

5 17.91 ms lag-806-10.bbr01dllstx.netops.charter.com (96.34.2.32)

6 34.17 ms lag-809.bbr02atlnga.netops.charter.com (96.34.1.204)

7 33.32 ms lag-7.bbr01atlnga.netops.charter.com (96.34.0.24)

8 59.84 ms lag-806.bbr01blvlil.netops.charter.com (96.34.0.37)

9 58.04 ms lag-808-10.crr01blvlil.netops.charter.com (96.34.2.109)

10 58.20 ms lag-300.crr01olvemo.netops.charter.com (96.34.76.176)

11 58.30 ms 172.16.0.9

--

Nmap scan report for 172.16.0.11

Host is up (0.060s latency).

TRACEROUTE (using proto 1/icmp)

HOP RTT ADDRESS

- Hops 1-10 are the same as for 172.16.0.9

11 57.77 ms lag-100.dtr01olvemo.netops.charter.com (96.34.48.95)

12 60.59 ms 172.16.0.11

--

(some excerpts removed for readability)

Now for the tooling I made for this. The primary function I need is a way to get all the IP addresses in that range and to analyze traceroute responses for traffic exiting the local network. I also realized there may be problems beyond just that 172 range, so I added the goal of searching all private-only ranges that should never route outside of a local network.

A sub-task for getting these traceroutes is identifying my local network. Scripting this part was kind of weird because, apparently, nearly every Python tool for doing this relies on the Linux-only /proc/net/arp or on running arp -a on Linux or Windows. So I went the arp -a route and used a regex to pull out the IP addresses. I then broke the IP ranges into individual IPs and checked whether each IP is in the ARP table; if not, I try to traceroute it. This basically means: if it's not in my local network, do a traceroute.

If the traceroute then contains something that isn't local (in the script, any private address other than my default gateway), list it as possible. That is, if the traceroute doesn't die at my router, give me the IPs that it does show. After a few rounds of hammering out what would and wouldn't work, and dealing with how slow it was, I got this:
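To get a feel for the size of that search space, here is a small sketch using Python's standard `ipaddress` module. The list below is the three RFC 1918 private IPv4 ranges; the helper names `range_sizes` and `targets` are made up for this example, and which ranges to actually scan is a separate choice.

```python
import ipaddress

# The three RFC 1918 private IPv4 ranges.
PRIVATE_RANGES = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]


def range_sizes(ranges):
    """Return {cidr: number of addresses} for each range."""
    return {r: ipaddress.ip_network(r).num_addresses for r in ranges}


def targets(cidr, skip=()):
    """Lazily yield every address in cidr as a string, except those in skip."""
    skip = set(skip)
    for addr in ipaddress.ip_network(cidr):
        if str(addr) not in skip:
            yield str(addr)


for cidr, size in range_sizes(PRIVATE_RANGES).items():
    print(cidr, size)
```

Because `targets` is a generator, a whole /12 never has to sit in memory as a list, which matters once the ranges get this large.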

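import logging

# Silence scapy's runtime warnings; this has to happen before scapy is imported.
logging.getLogger("scapy.runtime").setLevel(logging.ERROR)

import concurrent.futures
import ipaddress
import os
import re
from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor

import netifaces
from scapy.all import traceroute

# Default gateway address(es): the only private hops expected in a trace.
routes = []
gateways = netifaces.gateways()
for family in gateways['default']:
    routes.append(gateways[family][0][0])

def arpips():
    # Return every IPv4 address that appears in the output of `arp -a`.
    ips = []
    try:
        with os.popen('arp -a') as f:
            output = f.read()
        for line in re.findall(r'\b(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b', output):
            ips.append(line)
    except Exception:
        pass
    return ips

def loop(addr):
    # Traceroute one address and return the private source addresses that
    # answered, excluding the default gateway(s).
    ans, unans = traceroute(str(addr), verbose=0)
    suspicious = []
    try:
        for i in ans:
            ipaddr = i.answer.src
            if ipaddress.ip_address(ipaddr).is_private and ipaddr not in routes:
                suspicious.append(ipaddr)
    except Exception:
        pass
    return set(suspicious)

def rangeloop(r):
    print("Starting Range: %s" % r)
    for addr in ipaddress.IPv4Network(r):
        # A new executor is created per address, so traces within a range
        # effectively run one at a time.
        with ThreadPoolExecutor(max_workers=10) as traceexecutor:
            tracefutures = []
            # Skip addresses already known to be on the local network.
            if str(addr) not in arp:
                tracefutures.append(traceexecutor.submit(loop, addr))
            for f in concurrent.futures.as_completed(tracefutures):
                if len(f.result()) != 0:
                    print("Possible: %s" % f.result())

arp = arpips()

# Note: 224.0.0.0/4 is multicast space, and 10.0.0.0/8 is not in this list.
ranges = ['192.168.0.0/16', '172.16.0.0/12', '224.0.0.0/4']

if __name__ == "__main__":
    # One worker process per range.
    with ProcessPoolExecutor(max_workers=3) as rangeexecutor:
        rangefutures = []
        for r in ranges:
            rangefutures.append(rangeexecutor.submit(rangeloop, r))
        for f in concurrent.futures.as_completed(rangefutures):
            f.result()  # surface any exception raised in a worker process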

Tool pitfalls:

There are some issues with this tool, mostly that trying to span all of these IPs at once is extremely difficult and time consuming, and there isn't really a good way to speed it up without crashing from memory usage. Which I ran into. A lot. I.e., this should likely be done at a much lower level or with more direct memory control. This version of the script is what got hammered out after playing with resources a bit to see what works and what doesn't. It took roughly 10 hours to show the first response, which was a 172.16 IP. So you could imagine that getting past the first 65536 IPs takes around 10 hours. If I had left this as the 172. range only, those could have started being enumerated much sooner. If you want to use this to test your own network, maybe spin up a Raspberry Pi, run this in the background, and pipe it to a log. I created this out of curiosity; I may make more adjustments to it or rewrite it later. For the time being, this is where I'm at with it.
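One possible direction for a rewrite, which I have not tested against a real scan, is to feed addresses to a single thread pool in fixed-size batches so only one batch of results is ever held in memory. The sketch below is an assumption-heavy illustration: `chunks` and `scan_range` are hypothetical names, `trace` stands in for whatever traceroute function is used, and the batch size and worker count are arbitrary.

```python
import ipaddress
from concurrent.futures import ThreadPoolExecutor
from itertools import islice


def chunks(iterable, size):
    """Yield successive lists of at most `size` items from iterable."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def scan_range(cidr, trace, batch_size=256, workers=32):
    """Run trace(addr) over cidr one batch at a time.

    Yields (address, result) for every address whose result is non-empty.
    Only one batch of pending work exists at any moment.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for chunk in chunks(ipaddress.ip_network(cidr), batch_size):
            for addr, result in zip(chunk, pool.map(trace, chunk)):
                if result:
                    yield str(addr), result


def fake_trace(addr):
    # Stand-in for a real traceroute: "finds" something on even addresses.
    return {"hit"} if int(addr) % 2 == 0 else set()


for found in scan_range("192.168.0.0/29", fake_trace, batch_size=3, workers=2):
    print(found)
```

The trade-off is that each batch waits for its slowest trace before the next one starts, so the batch size would need tuning against real timeouts.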

Bringing to attention #2:

I reached back out to let them know that more had been found. Not just one, not just a few, a lot. I wound up stopping the search at 1103 found, the last being 172.16.191.71. To be clear, there are potentially many, many thousands left; I just couldn't leave my laptop running this scan for that long, so it only validated what it could before I had to stop it. Anyway, the conversation went a different way this time around. Me saying there were a lot of these turned into them refusing to escalate: "We are not able to escalate this issue with trace being to a private IP Address". Despite my showing the routes repeatedly going outside of my home network, and that the private IP was being treated like it's public, this is the stance they repeatedly took.

As the conversation went on, I was asked to hook my laptop directly to the modem and perform a public traceroute to show the error. I took this to mean they didn't understand, so I went ahead and did this against the IPs involved, because a trace to any public IP would not be relevant to the problem. Surely this would show them the problem: I got a public IP, I'm tracerouting to a private IP, it's traversing multiple hops, aaaand nope. They didn't care.

Bringing to attention #3

Due to the lack of technical competence they were willing to show on this problem, I moved on to reporting to a CERT vulnerability reporting party. For this, I initially used the reporting form at https://www.kb.cert.org/vuls/report/ and included details such as my intended timeline for this blog post and everything I could about how this could be used by a threat actor. I also made sure to give them a copy of the discussion, so it's known that Spectrum's policy prevented them from escalating the problem to be looked into. Side note: I do fully understand those types of policies, because bigger businesses have to assume at some level that people don't know what they're talking about by default, in an effort to streamline support tasks. The problem here is that the route showed public endpoints between private networks, within Spectrum's routers. The traceroute details by themselves are therefore enough to know there is a problem, without needing bilateral, inside-out communication paths.

Results: the CERT team refused to handle it, because their focus is on critical infrastructure, and basically "we don't usually do this for active websites, you should work that out with them first".

So, I guess we'll see if HackerOne will help. Their disclosure assistance program lists Charter as a disclosure entity, but they might also not take this, as they primarily assist where active exploitation is occurring or with critical issues (https://hackerone.com/disclosure-assistance?type=team). After 8 days, I'd heard nothing at all from doing that.

Bringing to attention #4

I also went back a few more times and updated the Facebook chat with the things I'd found. It was recommended to me (based on reading about how other people report things) that if I wanted to publish a blog post about an active vulnerability, I should ask their media contacts. So I went over to https://corporate.charter.com/media-contacts, found the regional contacts, and sent them an email. A day or so later (June 22), I had a morning email come in saying they had received my recent feedback and asking for a contact number. I provided that and was called within an hour. The call was pretty brief and super professional. They basically said it was beyond their scope, so they'd escalate it to the NOC if I could send them evidence via email. Apparently, they explained, IPs in the chat logs only show up as "stars" (****), so they couldn't even see what was happening. So I got right on writing that up to ship it off.

The email:

The next day I was called back by the same individual, who informed me they did escalate it and it was being reviewed. They had said they would call me back, so they made sure to do so.

Resolution:

I got a call on June 25th, 2025, saying that their NOC escalation did come to a resolution. I don't recall exactly what they said the solution was, but it sounded roughly like access rules were put in place appropriately. I didn't record the call, so sadly I can't go back and confirm the wording.

When I asked if they had any issue with me publishing this blog post now that it's resolved, I was told they don't really have any say in that, so it's on me. Therefore, posting accordingly.

Lessons learned:

I always like to do lessons learned whenever possible to try to understand what went right and wrong. It's a good way to avoid pitfalls in the future and to level up for next time.

So let's do the right side first:

- Spectrum has policies in place for when to escalate, with conditions. Just because I disagree with what their policy is doesn't mean I can't be grateful they have a policy in place at all.

- Found a weird problem and attempted to report it instead of sitting on it or trying to exploit it unannounced.

- I asked them for permission to write a blog post about this instead of just publicly announcing it while it was exploitable.

- CERT authorities and bug bounty companies aren't really supposed to be used as a middleman when the vulnerable platform refuses to investigate or look into a problem. But they are at least a resource to use after trying. So less stress, more success. I'm counting this as a good thing even though it failed.

- Everyone at spectrum seemed respectful and dutiful, in spite of my troubles escalating.

Now let's go for the bad side:

- I repeatedly typo'd during the chat. This turns into a really unprofessional look.

- I got frustrated that they simply stopped responding to the chat instead of attempting to investigate. It makes me feel I should have let it run its course and remembered there are other alternatives if they refuse to investigate. At the end of the day, I'm just here doing my thing; it's not my responsibility to ensure solutions, even if I want them to be safer and fix it.

- Enumerate before reporting. If I had realized there were thousands of these IPs, that would have been included in the first report, which may have led to a better experience dealing with this. When I emailed back after being called, I was able to put everything together in a way that I feel made way more sense, and that really should be how I approach these things first in the future.

- When doing mass scanning, especially for bug bounties, exclude things from scope, including but not limited to IP ranges, specific subdomains, etc. If you can't (tool doesn't allow it?), don't automate those scans yet. Instead, parse before scanning. This is generally good practice anyway; to be honest, I was just lazy and it turned into a lot of nonsense.

- Accidentally stumbling on something bigger isn't necessarily good. In fact, in this case, it means I need to up my game.
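For the "parse before scanning" lesson, something along these lines could sit in front of a scanner. It is only a sketch: the function name `in_scope`, its parameters, and the example hostnames are all hypothetical, and a real scope list would come from the bug bounty program's own rules.

```python
import ipaddress


def in_scope(target, excluded_nets=(), excluded_hosts=()):
    """Return False for targets that should be dropped before scanning.

    target         -- an IP address or hostname as a string
    excluded_nets  -- CIDR ranges that are out of scope
    excluded_hosts -- domains that are out of scope, subdomains included
    """
    try:
        addr = ipaddress.ip_address(target)
    except ValueError:
        # Not an IP literal, so treat it as a hostname.
        host = target.lower().rstrip(".")
        return not any(host == h or host.endswith("." + h) for h in excluded_hosts)
    # Never scan addresses that cannot belong to a public target.
    if addr.is_private or addr.is_loopback or addr.is_link_local or addr.is_multicast:
        return False
    return not any(addr in ipaddress.ip_network(n) for n in excluded_nets)


candidates = ["172.27.52.188", "8.8.8.8", "dev.example.com", "example.org"]
print([t for t in candidates if in_scope(t, excluded_hosts=["example.com"])])
```

Running the resolved address of every CNAME through a check like this before scanning would have dropped the private 172. target from the queue.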

Total Timeline:

- June 13: reached out about original one weird route I found, told it would be escalated

- June 15: reached back out via Facebook after finding substantially more of these. Same date, was told they couldn't escalate because they needed traceroutes to a public IP.

- June 17: last response via Facebook "...We are not able to escalate this issue with trace being to a private IP Address..." Same date, tried to contact cert.org (VRF#25-06-CKDXS), was told I should deal directly with the vendor (part of the escalation indicated they had refused to escalate it up to this point). Same date, later in the day, created HackerOne disclosure assistance report (3205453).

- June 20: Contacted media contacts

- June 22: I was contacted about an escalated support issue

- June 23: Called back to let me know it was being escalated

- June 24: First day checked and found routing returned to normal

- June 25: Call confirming resolved
